Feeding the machine

6th December 2024

Biological AI needs a fair and sustainable data supply. Oliver Vince and Glen Gowers explain how we can explore the planet’s biodiversity in a strategic and equitable way

Biotechnology is the science of taking biological ‘components’ – genes, proteins, organisms – and adapting, engineering or repurposing them to deliver a solution to a problem. The multi-trillion-dollar global bioeconomy is one of humanity’s few credible routes towards a clean, sustainable and healthy future for all.

However, decades of biotech successes have been accompanied by a growing sense of injustice over the distribution of the resulting profits and other benefits. The perceived practice of exploiting naturally occurring genetic material while failing to pay fair compensation to the community from which it originates has led to national and international regulations governing commercial access to genetic resources, most notably the Nagoya Protocol, which aims to ensure the benefits of genetic resources are shared properly with the countries in which they were found.

As a result, industry scaled back its commercial ‘bioprospecting’ and focused its research and development on the ever-growing amount of digital sequence information, or DSI, freely available on academic databases.

Dubious data

These databases have so far provided an imperfect but serviceable source of information to fuel progress in biotechnology, and are a ‘digital loophole’ – allowing anyone to explore and develop products from the data without the need to acknowledge where it came from or who produced it.

However, as the most recent issue of The Biologist shows, biotechnology is changing profoundly. It is now increasingly using data-hungry machine learning and AI models to generate ideas and answers. Across all the latest machine-learning and AI-based biological models, the same fundamental problem arises: the more advanced the model, the higher the quality and quantity of training data needed, and the less of this type of data is available. The outputs of these models are highly dependent on the information that they ingest – and DSI is not up to the task. It is hindering the vast potential and possibilities of this new technology.

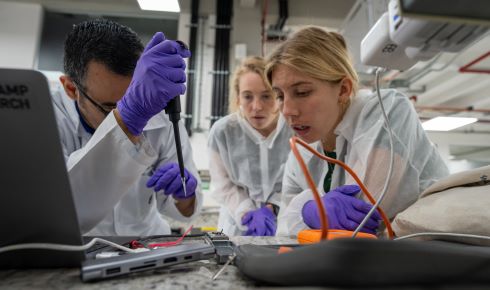

Basecamp’s sampling expeditions aim to gather vast amounts of new sequence data and metadata on the environmental context in which it was found.

There are estimated to be over 1 trillion species on Earth. Yet half of all the microbial genomes available on public databases are from just 12 species. The majority of samples to date have been collected from the US, Europe and China, often by researchers all working on the same model species or within narrow seams of genetic diversity related to human health. But it is the absence of ‘biological context’ – describing the environment the genes, proteins and organisms evolved in – within public data that is likely to be the most significant limitation for the field.

What’s more, the DSI ‘digital loophole’ is closing. The UN recently published its draft recommendations on a new mechanism for fairly sharing the multi-trillion-dollar revenues derived from DSI – a topic that has been subject to heated debate at the recent COP16 UN biodiversity talks. Major AI companies in other sectors – from music and images to internet search – are facing high-profile legal proceedings that challenge the way they have used data to train models without the consent of the parties who generated that data. Through forums such as COP16, similar scrutiny is befalling the groups training AI models for biology on public collections.

DSI databases are repositories for academic collaboration, rather than a concerted effort to strategically explore and understand the biodiversity of the planet. They have serious limitations as a source of training data for the computational models of the future. And the lack of a sustainable alternative threatens to hinder the huge potential of this new form of bioscience. A more equitable and inclusive approach to biodiscovery is needed.

Basecamp research in Costa Rica

Our UK-based startup, Basecamp Research, is meticulously gathering primary data first-hand to build our models from the ground up. We have forged bilateral access and benefit-sharing relationships with biodiversity stakeholders in more than 20 countries, enabling the collection of genetic data from biodiversity at a pace, scale and quality not previously attempted.

These partnerships have allowed us to collect a data set that far exceeds the size, quality and information content of all public DSI collections to date. In just a few years, our international sampling expeditions have increased the number of proteins known to science by more than 10-fold.

Access to this data has enabled our models to outperform all foundational AI across protein function, structure and generation. Now, it’s letting us build completely new categories of foundational models that simply cannot be built on the public DSI collections.

We work with many of the top companies and researchers around the world. Public examples range from Procter & Gamble using the models to design enzymes for detergents, to Dr David R Liu’s laboratory at the Broad Institute of MIT and Harvard, with whom we’re developing fusion proteins and other large molecules to enable the next generation of genetic medicines.

Basecamp explores biodiversity hotspots and understudied ecosystems to find novel genetic sequences

As we see financial success, so do our biodiversity partners. We have invested millions of dollars into biodiversity partnerships to date and have already paid back royalties to a wide range of stakeholders.

Crucially, we also share non-monetary benefits, including training, transfer of technology and research collaboration. Deploying portable laboratories in each country gives much greater control over, and consistency in, sample choice, metadata collection and the molecular biology techniques used. Working with local partners and scientists allows us to benefit from the experience and passion of experts who have a deep knowledge and understanding of the local biodiversity.

Providing training, technology and facilities ensures consistent, high-quality data comes back to us and is more effective, efficient and sustainable than operating ‘helicopter-science’ biodiscovery expeditions, where researchers fly in, take some samples and leave. The skills, facilities and resources are highly valued by communities. Molecular biology skills, portable labs and increased ‘bioliteracy’ can help advance local science, such as conservation efforts or infectious disease monitoring, and enable local populations to participate in the bioeconomy themselves.

The partnerships therefore lay the groundwork for a long-term shift to redress the technological imbalance between ‘user’ and ‘provider’ countries, and create economic and social incentives to value biodiversity.

Time for change

The growing demand for the vast quantities of high-quality genetic data needed to train large models can only be met by developing sustainable partnership-based data supply chains. These partnerships need to both align incentives and share benefits with the providers of biodiversity. The new era in biotechnology presents a natural opportunity to align commercial interests with development goals and our pressing need to value natural resources.

Glen and Oliver at Basecamp offices

Companies that proactively – and positively – engage with the providers of the resources that they depend upon (either themselves, or through a specialist company like Basecamp Research) can more confidently navigate the coming regulatory minefield and will be rewarded with access to training data and, ultimately, far more sophisticated products than would be possible using only publicly available data.

Legal and sustainable access is key to realising the potential of AI in biology, and it just might help us finally understand the true value of the world’s biodiversity too.

For more on this topic see Vince and Gowers’ research article.

Oliver Vince and Glen Gowers are co-founders of Basecamp Research.